Challenge powered by Oxylabs

Parse The Page: prepared by Karolina Šarauskaitė, Python Developer & Squad Lead at Oxylabs.

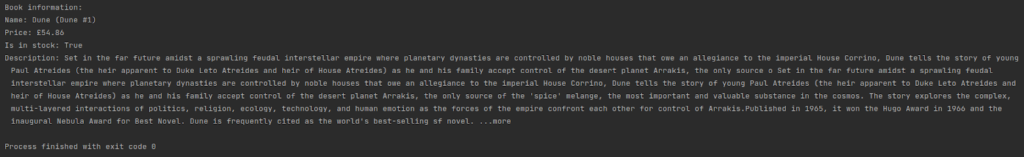

Try out the role of a software developer! Karolina from Oxylabs has created a simple task designed for beginners. The steps are described in great detail, and you can even see a possible solution. So don't wait any longer: go ahead and give it a try right now! Two winners will receive a career consultation with a recruiter from Oxylabs!*

Here’s what you need to do:

- Read the instructions provided below.

- Complete the task following the instructions.

- Once you’ve completed the task, take a screenshot of your solution.

- Return to this page.

- Submit your task solution and register by May 31st. You can find the registration form at the bottom of the page.

- On June 4th, two winners will be chosen at random to receive consultations and will be announced here on the page.

- And remember, the most important thing is to try. You can do it!

Good luck!

*Only registered participants are included in the competition and have a chance to win a prize.

Winner announcement!

And the winners are: Austėja Bersėnaitė and Carole Roskowiński! Congratulations! You have each won a personal career consultation with a recruiter from Oxylabs.

*Winners, please get in touch by emailing support@womengotech.lt.

Tried the challenge from Oxylabs? Great! Congratulations on completing it! Please take a screenshot of your solution, upload it here, and sign up.

Oxylabs is a market-leading web intelligence collection platform, driven by the highest business, ethics, and compliance standards, enabling companies worldwide to unlock data-driven insights.